DHO is Mostly Confused

Rants from Devon H. O'Dell

Building a Debugging Mindset

I spoke at QConSF last week about debugging. The content is largely similar to my previous blog posts on the topic, but I think more refined. I’m unsure as to when the video of the talk will be made available on InfoQ’s website. If you attended QConSF, you have early access to watch the video. However, since that may be some time yet, I figured I’d put the slides here with a rough transcript.

I love speaking and sharing knowledge. Every time I do it, I get a bit nervous in the minutes before I present. I opened this one up with a favorite joke of mine to break the ice:

An ion walks into a bar. It goes up to the bartender and says, “Hey, excuse me, I think I left an electron here last night.” The bartender asks, “Are you sure?” And the ion replies, “I’m positive!”

Today, I’m really excited to share with you a learning journey I undertook. I wanted to know why some people seem better than others at debugging, which sort of led me to the fundamental question of why debugging is hard. What makes it hard? And the underlying motivation here was that I wanted to see how this thing that we spend so much of our time doing, that seems so difficult, how we could make that process easier.

I’m actually going to front-roll some credits for this talk, so I want to thank Inés Sombra, Elaine Greenberg, Nathan Taylor, Erran Berger, and my wife Crystal O’Dell for sitting through this over multiple iterations. And also thank you for coming. There are some really awesome presentations that I know are going on during this time, so thanks for being here.

A bit about me. I’m Devon O’Dell, it’s really great to meet all of you!

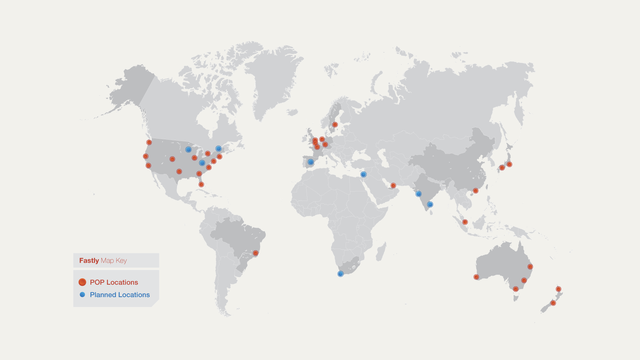

I work at Fastly; I’ve been there for about 4 years. We’re a content delivery network focused on near-real-time features like instant cache invalidation, streaming logs, live metrics, and instant configuration changes. We also provide web security and performance-related solutions for our customers.

Professionally, I started doing web development in 2000. I started mixing in systems programming (down at the kernel / hardware level) in about 2004. At Fastly, I work on our edge caching layer. I lead a team that works on performance and features. I also collaborate with other teams that build on top of the infrastructure I work on.

Now, performance and features are always really hard to get right the first time. So, inevitably, I spend a large amount of my time on debugging-related tasks.

And since I spend a lot of time debugging, I also spend a lot of time being confused. Debugging is hard because it’s confusing. And that’s really the thesis of this talk: debugging is hard.

This talk is roughly split into two acts. In the first act, I’m going to speak about why debugging is hard, and sort of finish off with what are some of the qualities that make some people inherently better at the task than others. And in the second, I’m going to discuss what things we can do to make everybody successful at debugging.

So: Act 1: Debugging is hard.

Over the years, I’ve asked folks what they think, why they think debugging is difficult, and there’s a real wide range of answers, right? Confusing interfaces, incomplete knowledge of systems, state space explosion, experience, just to name a few.

So let’s look at some of these, let’s take experience. Debugging is hard because it’s a skill, and experience ranges widely. But I know ridiculously senior engineers who seem basically “okay” at debugging. They’re not great, but they’re not bad. But I’ve also worked with novices who just seem to have a knack for it that’s sort of beyond their skill level, or what you would expect from their experience. And research shows, by the way, that there is a wide range of skill, even amongst experts, so this isn’t just me telling you an anecdote.

Experience really can’t be the only factor, so let’s look at another one.

Let’s look at confusing interfaces. I work primarily on systems stuff in C and POSIX environments these days. And for me, the mmap syscall is a really, really great example of a confusing interface. It has 6 arguments; I can never remember the order – because it’s not really logical, none of them sort of follow from the other. I don’t actually know any syscalls that have more arguments that I can think of off the top of my head.

One of the arguments is a bitmap of flags. Now POSIX specifies three or four, but on Linux, you have like twelve. Other systems have more or fewer.

Mmap can return at least seven different kinds of errors, and one of those errors can happen for four completely separate reasons.

So mmap is hard because I can really never remember how to use it, how it fails, and this really means that I’m constantly referring to the manpage every time I need to use this interface. And when I have to debug it, you got it: back at the manpage.
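To make that concrete, here is a minimal sketch of one mmap call with all six arguments labeled in order, plus the failure check. `map_scratch` and `unmap_scratch` are hypothetical helper names of mine, not anything from the talk, and the sketch assumes `MAP_ANONYMOUS` is available (it is on Linux; it is not one of the flags the talk attributes to POSIX).

```c
#define _DEFAULT_SOURCE /* exposes MAP_ANONYMOUS on glibc */
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>
#include <sys/mman.h>

/*
 * Hypothetical helper: map `len` bytes of private, zero-filled anonymous
 * memory. Returns NULL on failure and leaves errno set by mmap.
 */
static void *
map_scratch(size_t len)
{
    void *p = mmap(NULL,              /* 1. addr: let the kernel choose */
        len,                          /* 2. length in bytes */
        PROT_READ | PROT_WRITE,       /* 3. prot: page protections */
        MAP_PRIVATE | MAP_ANONYMOUS,  /* 4. flags: the bitmap of flags */
        -1,                           /* 5. fd: unused for anonymous maps */
        0);                           /* 6. offset into fd */

    if (p == MAP_FAILED) {            /* failure is MAP_FAILED, not NULL */
        int saved = errno;            /* fprintf may clobber errno */
        fprintf(stderr, "mmap(%zu): %s\n", len, strerror(saved));
        errno = saved;
        return NULL;
    }
    return p;
}

/* Hypothetical helper: release a mapping created by map_scratch. */
static int
unmap_scratch(void *p, size_t len)
{
    assert(p != NULL);
    return munmap(p, len);
}
```

Calling `map_scratch(4096)` should hand back a zero-filled region to pass to `unmap_scratch` later; a zero length is one of the ways the call fails with `EINVAL`.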

So these aren’t really satisfying answers: experience or confusing interfaces. They don’t really give us the whole picture. And it seems like there’s something more fundamental going on about the difficulty. So to figure out what to do about the difficulty, I thought I needed to understand why it was difficult, fundamentally. So I kept looking, and I thought that some of the answer would lie in the history of software engineering.

So I went way, way, way, way back. In “Memoirs of a Computer Pioneer,” Maurice Wilkes talks about his experience programming the EDSAC (at Cambridge, I believe) in June of 1949. And now, at this time, nearly nothing about software development was obvious. And at one point, Wilkes relayed this powerful realization he had that he was going to spend a large portion of the remainder of his life finding errors in his own programs.

Now, debugging is hard because it’s not obvious. If we think back to when we first started programming, it wasn’t ever obvious that it was going to be hard to figure out what went wrong, or even that things would go wrong in the first place. Programs were just these sort of magical things that mostly worked. And when they didn’t, we thought it was our fault.

Bugs also have a connotation of failure. And Wilkes, in the full context of his quote, sort of gives a hat tip to this. I think this connotation has sort of led us to a culture of half-joking about how we make terrible decisions about the design and implementation of systems and interfaces.

Debugging is hard because we’ve created a self-deprecating culture around it. So nobody actually wants to do it. And I think that’s tragic, because as other people in this track before me have said, we learn from failure. So every failure is at least a partial success.

Ok, so let’s fast-forward twenty-five years. In 1974, Brian Kernighan and P.J. Plauger publish the book “The Elements of Programming Style.”

And in this book, Kernighan introduces this quote that I think many of you may be familiar with:

Everyone knows that debugging is twice as hard as writing a program in the first place. So if you’re as clever as you can be when you write it, how will you ever debug it?

Now taken at face value, this is pure rhetoric. All it says is that debugging is hard and we’re not clever enough to debug our cleverest solutions. And this is actually how I see this quote used most often in context: as a joke, in jest. Again with the self-deprecation.

But I think it says something more. What if we looked at this question as a challenge?

This leads me to much more fundamental questions about the conjecture and the premise. Is debugging really twice as hard as programming? If so, why? And how will we ever debug these programs?

Let’s just take as a given that debugging is twice as hard as programming. Where might we learn more information about how to apply that practically?

One place I found answers is an area of positive psychology pioneered by Mihaly Csikszentmihalyi; the concept of Flow. We all intuitively know what Flow is, it’s this feeling of being “in the zone.” Everything else is shut out and you just get stuff done. You look up at the clock, hours have passed. You just feel good. We feel our most accomplished, our most productive, and our most fulfilled in our jobs or the activities we’re engaging in when we’re operating in this Flow state.

Now, “Flow” is actually the technical term for this, even though it sounds a little “watery.”

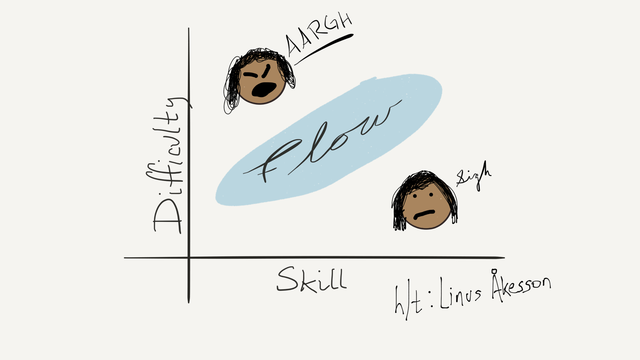

I do want to say that tying Flow to debugging isn’t an original idea of mine. I got the idea from Linus Åkesson, who wrote a blog post specifically tying it to Kernighan’s quote.

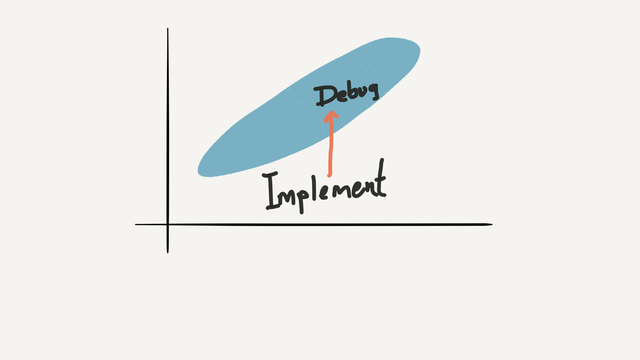

But basically, let’s say we’re debugging a problem. We can plot that task on this graph based on the bug’s difficulty versus our debugging skill. There’s this area in the middle that represents our Flow state, and this is where we’re going to be our most effective and most engaged in solving the problem.

However, if the bug falls below that Flow state, what happens is we feel bored or apathetic. Maybe in some cases relaxed, but we’re never really engaged in solving the problem. Now, if some task is too hard, it’s above our Flow state, and we might get frustrated or worried or anxious solving the problem. You might not be able to hit a deadline or something.

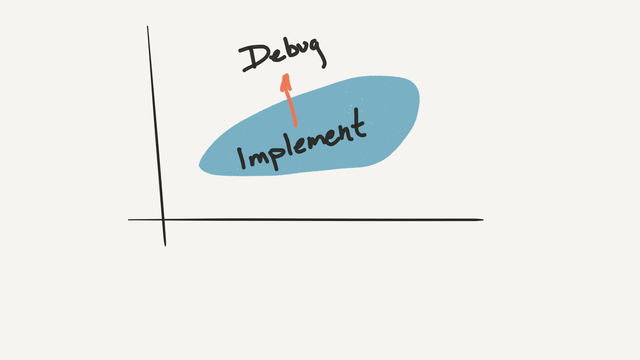

Practically, this actually gives us a choice. We can a priori optimize our experience either for debugging or for implementation.

So if we implement below our Flow state and debugging is twice as hard, we’re optimizing to be able to debug in Flow. However, this means we’re going to be bored writing our code, because we’re not that engaged.

If, on the other hand, we implement in Flow state, we get frustrated and anxious debugging outside of our skill.

So what choice do we make here? Most studies I’ve read seem to find 50% of the time spent in the software development lifecycle is on debugging-related tasks. I’ve actually seen studies that state as high as 80%. So if we’re spending an absolute minimum of half of our time debugging, you might think we should optimize for debugging in Flow. Implementing below Flow.

But I want to challenge that here; I want to encourage implementation in Flow. And one simple, but absolutely crucial reason for this is that to gain skill in an activity, we have to practice at the edge of our ability.

So for example, I’ve played guitar for twenty-five years. And I suck.

And fundamentally, this is because I rarely challenge myself. I learn some song or – the coolest thing I ever learned was some sweep picking pattern, and I can’t even do it very fast. But I rarely challenge myself to do something new. We don’t get better at guitar by playing one chord or plucking one string time after time after time. In fact, I don’t think that would even help us master that chord because you’ve got to do transitions in and out of it, et cetera.

So to gain skill, we really have to practice at the edge of our ability.

For debugging, what this means is that we have to learn something new. And since learning involves teaching to some extent, I thought I might find some answers to my questions in the area of the pedagogical aspects of debugging.

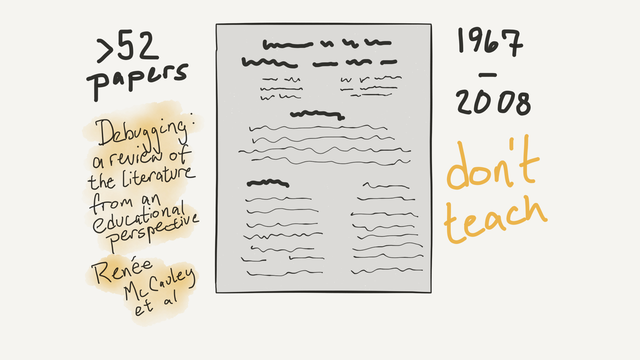

Debugging actually turns out to be hard because we don’t teach it. There are lectures embedded into some courseware that I’ve seen, but as far as I’m aware, no universities are actually teaching full courses on the subject. I think that needs to change. I think industry is actually in a place to ask for that change from academia.

Even so, there’s been plenty of research into teaching it. My hands-down favorite paper in this area is a 2008 literature review from Renée McCauley and like six other folks. And what it does is survey fifty-two papers from 1967 to 2008 and it’s trying to gather – sort of collate – all the information on how debugging is taught, what’s working, and what’s not.

Most of these papers end up being about the classification. So the who, the what, the when, the where, the why of bugs. And what I was really interested in was the how. Right? How do we solve it?

Unfortunately, after reading tens of papers, nothing I read actually had conclusive, positive results that we can help people get better at debugging through teaching it. So. Back to asking questions.

And why not go to the root cause? Why do bugs happen? We know specific reasons: off-by-one errors, race conditions, buffer overflows, whatever. But these are actually just classifications of related types of failure. They’re not explanatory. And the key reason that I love this paper is that it goes through the literature and gives us a single, generalized, and I really think correct answer about why bugs occur.

It says that bugs occur due to “chains of cognitive breakdowns that are formed over the course of programming activity.” That sounds ominous, right? But what does it actually mean?

So it means that debugging is hard because we don’t know, and we can’t possibly know everything. For any moderately complex system, we can’t possibly know all of it. So we create mental models about how the system behaves. And these are effectively assumptions and the goal is to provide a foundation to us to accurately reason about the behavior of these systems as we execute them, as we make changes, and as we debug them.

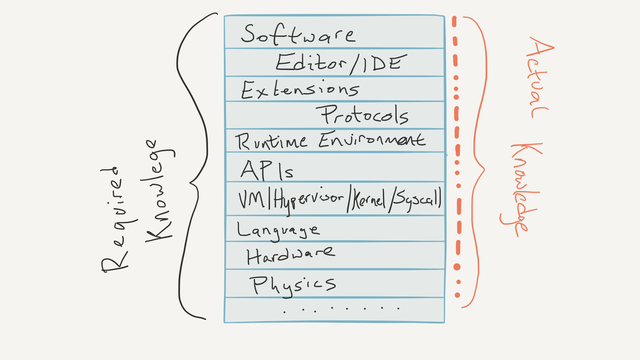

We actually need to do this even for the simplest software. We take it for granted because of experience, I think, but really think. Just take a second to really think about everything that has to happen under the hood for “Hello, World!” to work in any environment.

Our gaps in knowledge can lie anywhere from our editor, our compiler, our runtime environment. Our filesystem. Kernel. Hardware. Physics, if we’re dealing with latency-sensitive applications. Debugging is hard because we have so many opportunities to lack knowledge, even for very fundamentally simple problems.

And I think this answer, that we necessarily lack knowledge really gets to the core of why debugging is hard. I think it actually shows that Kernighan is right, that it is actually harder than programming.

If you don’t agree, think of it this way: writing software requires us to use existing knowledge to solve a problem. If we’re writing new software, we may have to learn something new, but we typically front-load this into our process with technical design, reviewing API documentation, et cetera. That happens before we really engage in writing full systems in code.

Debugging on the other hand requires us to gain new knowledge to solve a problem that we already thought was solved correctly. And this is harder because we by definition don’t know where we went wrong. And so, I think this roughly proves Kernighan’s conjecture.

Now, I mentioned none of the research had conclusively positive results in how we teach debugging in order to help people get better at it. And maybe that’s why we don’t teach it: we don’t know how. But in that 2008 paper, it was recommended that future studies into teaching debugging look into some work from Carol Dweck. She published a book called “Self-Theories” in 1999; there’s also a sort of pop-psych version called “Mindset” that some people may be more familiar with.

Anyway, this 2008 paper suggests we might find some promising results there. And I do want to note that a later paper – or some later papers – have actually seen successes in teaching debugging, incorporating this stuff, which is why I got interested in it.

So Dweck’s work includes over 40 years of what I think is just absolutely fascinating scientific research that unfortunately I don’t have time to cover in detail. But if this sort of hooks you a little bit, I will talk about it for years. If you read one reference from this talk, and at the end I have reference slides in case you’re interested, I do recommend reading this book. It’s not that long.

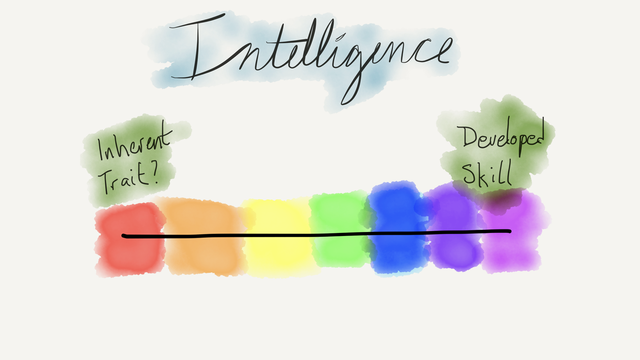

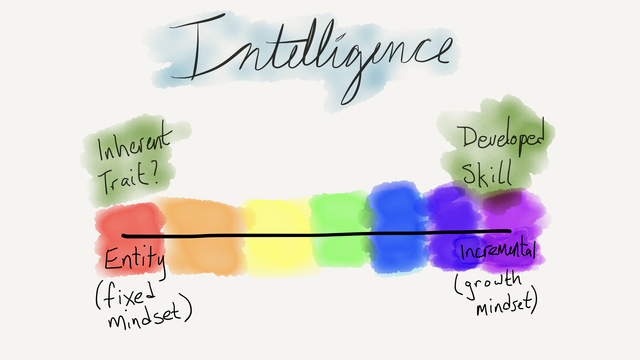

In a nutshell, her work says that an individual’s view of intelligence falls on a spectrum – somewhere on a spectrum – of intelligence being an inherent trait versus a developed skill. And actually where a person’s view falls on this spectrum says quite a lot about how they solve problems and their motivations for solving problems.

So what does intelligence have to do with debugging?

Debugging at its core is all about solving problems. Since this research addresses how and why folks are actually doing that, I think it turns out to be a really great proxy for talking about debugging.

People who have an idea that intelligence is an inherent trait (you can’t do much to change it, you’re sort of born with it) have a fixed mindset. And people who think it’s a skill (it can be developed, it’s malleable, you can grow in your intelligence) are said to have a growth mindset.

And it’s specifically the traits and the motivations and the behaviors of people operating on one or the other side of this spectrum that makes this relevant to debugging.

Debugging is hard in a fixed mindset. If you can’t change much about your skill at debugging, why try? So fixed-mindset individuals don’t tend to take on challenging problems. And this also means that fixed-mindset individuals stagnate in their skill. If you remember from earlier when we were learning about Flow and debugging, to get better at a skill, we have to practice at the edge of our ability, and fixed mindset individuals avoid this, because they don’t take on the challenging problems.

People operating in a fixed mindset are also more likely to cheat. Now, that sounds very academic, how do you cheat writing software? Well, think for example of folks who cargo-cult some code off of StackOverflow and put it in because it answered some question they were asking about how to do a thing in their software. They paste it in without understanding the code, the edge conditions, how it’s designed to work within a system, and maybe they don’t even give attribution. So now when there’s a gap in their understanding (which is I would assert a complete gap because otherwise they would have just written the code themselves), they can’t even figure out where that solution came from.

So why would anybody do any of this? The fixed-mindset individual is motivated primarily by appearing smart. This means they tend to compete with others in collaborative situations, like pair programming. This makes debugging more difficult because they don’t do this collaboration, they don’t ask questions, they’re trying to outperform. Because appearing smart is a motivator for them, they see failure as a failure. They don’t see it as an opportunity to learn. And to make matters even worse, failure will actually negatively affect their future performance when they’re working on problems that fall within their concrete, foundational skill level.

The growth mindset is basically the opposite of all of this. Growth-oriented individuals are fundamentally motivated by hard work and tackling challenging problems. And this is because they see it as a way to gain skill.

They work well with others in collaborative situations; they’re likely to ask questions therefore. They’re usually enthusiastic to impart their knowledge and help mentor others because they also learn through teaching.

Debugging is hard, but all of these traits make the task easier for the growth-oriented individual. And this gives us some insight as to why some people seem to be better at it than others. It’s as simple and fundamental as their motivations for solving problems.

So that’s a lot of information.

When I got to this point in my research I had a ton of questions. I still hadn’t learned anything about how we get folks interested in practicing at the edge of their ability. Can we move people from fixed to growth mindsets? How can we effectively develop our teams to solve the problems that they’re always facing?

So now we’re in act 2, and we’re gonna explore some techniques we can use as mentors and as individuals to improve our skill and to make the technique of debugging – the experience of debugging – less painful for everybody. I want to note that while I’m going to tie these techniques specifically to debugging, I think they all generalize very nicely; we can use them in other areas of our lives as well.

Creating growth-oriented teams starts at hiring.

When we’re looking at resumes, provide some additional screening for people who are clearly touting credentials. Who focus on individual achievements without trying to tie this to the global benefit of the solutions they provided. These folks are more likely to operate in a fixed mindset.

People who like to explain in detail how they worked hard to solve a problem, what’s challenging them right now, what they’re learning: these are folks who are more likely to have growth-oriented thoughts towards work and debugging.

And so in interviews now, I ask, “What are you working on or learning right now that’s really challenging you, that you’re finding difficult?” Fixed-mindset people don’t tend to have a compelling answer! They don’t want to admit there’s something that they don’t know. Now, growth folks will answer this question forever, so you need to time-box it.

I do really, really, really, really specifically want to note that we shouldn’t exclude fixed-mindset individuals from hire. There are a number of super-compelling reasons around diversity and what types of folks are more likely to develop a fixed mindset (specifically women and minorities). This is sort of culturally enforced, but I don’t want to get too much into that so I’ll talk about that more afterwards. But just as good a reason is that we can actually promote folks into a growth mindset.

This can be as simple as changing the words we use when communicating with people. Dweck’s research actually repeatedly shows that praising people for being smart results – reinforces a fixed mindset and all the negative that comes with it. Praising hard work, on the other hand, reinforces the motivation to work hard. It recognizes the effort put into work, and it promotes a shift to a growth mindset for someone who’s not there already.

When we praise folks for being smart, we’re actually robbing them of the recognition of the time and effort they spent solving problems. So the next time your team member solves a particularly hairy bug, praise them for their hard work. For their effort. And if someone calls you a genius for doing the same, deflect that assessment. Because the way you respond can help move that person away from their fixed view of your ability into a growth-oriented view. So you can reply with something like, “Thanks for the compliment! I’ve actually just done this kind of thing a lot.” Or, “I have a lot of experience in this area.”

Once we’ve created and promoted growth-oriented teams, we need to nurture and support their success. And one way to do this is by encouraging curiosity. Encouraging learning. When mentoring folks, we also need to be curious. What does a mentor have to be curious about?

So for example, I was explaining issues of debugging concurrent systems to a team member recently and used some metaphor (it doesn’t really matter what) to describe how concurrent processes interact with shared mutable state. My colleague reflected a completely different metaphor back at me. My first hunch was to toss it aside, because it didn’t immediately make sense to me. It wasn’t how I was internalizing the view of this system. So I wanted to correct this person.

But after some further thought, I realized that the new metaphor was perfectly fine, it just wasn’t what I was using. And this is what I mean by being curious: be open to new ideas, to new solutions, to new methods of actually thinking about things.

Because there are many paths to get to knowledge. Our own intrinsic views aren’t the best for everybody. People want to base new knowledge on existing knowledge. It provides them an already-understood foundation on which to build. So being curious is really about understanding where your mentee or your colleagues are coming from.

If we’re encouraging curiosity, we should expect to answer questions; we should ask questions. Asking questions about someone’s past experiences and solutions to problems is a really effective way to learn. And when I was first learning to debug, I didn’t even know what to ask. So I started with, “How do you debug?”

And one frequent answer I’d get was, “Oh, well, debugging’s more of an art than a science.” Is that true?

Uh… debugging is part of computer science, which is science, and computer art is something very different. So I think this is a B.S. answer.

I think the reason that we think debugging is an art is actually a privilege of experience. As we gain experience, we forget what it was like to learn a thing, and we forget even more the more experience we gain. Giving this answer tacitly allows us to avoid remembering. It acts as a scapegoat for not actually having to teach anything to anybody. Finally, this answer also reinforces the idea that debugging is not actually a science.

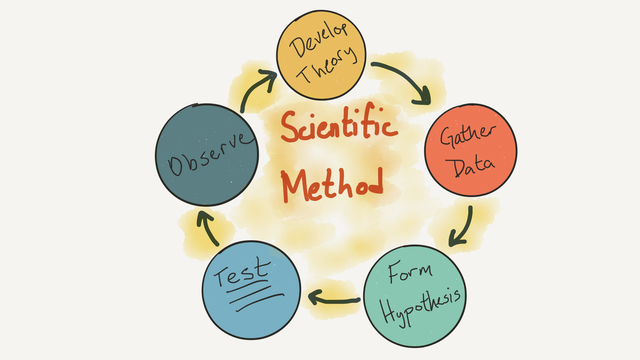

And that’s not great because the scientific method is a collection of techniques specifically designed for the acquisition of new knowledge. Which is exactly what we need for a task that requires us to gain new knowledge.

As a quick review: we observe a problem, we develop a general theory about the problem. We ask some questions, gather some data about that theory. We form a hypothesis, we test the hypothesis. Now science is in this sort of regard a Bloom filter. Because if our tests succeed, we might be right, and if they fail, we’re definitely wrong about something.
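If the Bloom filter comparison is unfamiliar, here is a minimal sketch of one. Everything in it (the `bloom_*` names, the 1024-bit size, the two seeded FNV-1a-style hashes) is my own illustrative assumption, not something from the talk. The property that matters for the analogy is in `bloom_maybe_contains`: a `false` answer is certain, while a `true` answer only means “possibly.”

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define BLOOM_BITS 1024u /* illustrative size, chosen arbitrarily */

struct bloom {
    uint8_t bits[BLOOM_BITS / 8];
};

/* FNV-1a-style hash mixed with a seed, so one routine yields two hashes. */
static uint32_t
bloom_hash(const char *key, uint32_t seed)
{
    uint32_t h = 2166136261u ^ seed;
    for (const unsigned char *p = (const unsigned char *)key; *p != '\0'; p++) {
        h ^= *p;
        h *= 16777619u;
    }
    return h % BLOOM_BITS;
}

/* Start with an empty filter: no bits set. */
static void
bloom_init(struct bloom *f)
{
    assert(f != NULL);
    memset(f->bits, 0, sizeof(f->bits));
}

/* Record a key by setting the two bits its hashes select. */
static void
bloom_add(struct bloom *f, const char *key)
{
    uint32_t a = bloom_hash(key, 0);
    uint32_t b = bloom_hash(key, 0x9e3779b9u);
    f->bits[a / 8] |= (uint8_t)(1u << (a % 8));
    f->bits[b / 8] |= (uint8_t)(1u << (b % 8));
}

/*
 * false: the key was definitely never added.
 * true:  the key was possibly added (could be a false positive).
 */
static bool
bloom_maybe_contains(const struct bloom *f, const char *key)
{
    uint32_t a = bloom_hash(key, 0);
    uint32_t b = bloom_hash(key, 0x9e3779b9u);
    return (f->bits[a / 8] & (1u << (a % 8))) != 0 &&
           (f->bits[b / 8] & (1u << (b % 8))) != 0;
}
```

A passing test is the `true` case: the hypothesis might be right. A failing test is the `false` case: something in the hypothesis is definitely wrong.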

But in practice, I notice that we skip steps.

So for example, let’s say our software crashed, and we look at memory, and it’s garbage. And we say, “Ah-ha-ha-ha! This is obvious memory corruption.” And we start looking for and testing that.

But that’s not a hypothesis, it’s a general theory. And because it’s a general theory, it’s also wrong sometimes. And so we end up calling it a heisenbug because we’re uncertain when it happens, even though the actual problem is that we don’t understand why it happens.

The root cause here is that we skipped some of the steps. We skipped the steps of creating and testing a hypothesis.

Furthermore, memory corruption is usually a symptom of something else, at least in my area. Something like wrong buffer sizes, off-by-one bugs, wild pointers, use-after-free, race conditions, any number of other things could actually be a cause for corrupted memory.

So we end up debugging symptoms instead of causes. And if we were looking for something specific, like an uninitialized variable or an off-by-one instead of “obvious memory corruption,” the heisenbug effectively ceases to be so uncertain.

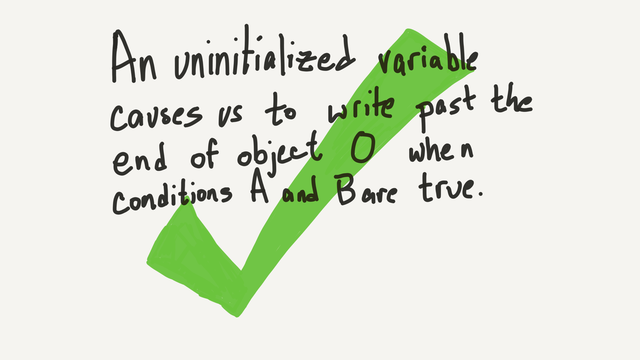

We can avoid all of this simply by creating and testing against a real hypothesis. Now, a hypothesis is a testable and falsifiable statement like, “An uninitialized variable causes us to write past the end of some object, corrupting adjacent memory under some very specific conditions.”

Reproducing the problem with tests is a crucial part of the scientific method as applied to debugging. This is how we test our hypothesis; we need to reproduce issues. Following this process not only makes debugging less frustrating, it actually makes it easier the next time around because all of a sudden, you can intuit causes from your new experience.
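As a rough sketch of what “testable and falsifiable” can look like in code, the example below turns a hypothesis of that shape into a deterministic experiment. It is a simulation under stated assumptions, not a real bug from the talk: `fill_object`, `corrupts_neighbor`, and the sizes are hypothetical, and the garbage value an uninitialized length might hold is modeled as an explicit `len` argument so the experiment behaves the same on every run. The “adjacent memory” is just the back half of one array, so nothing here actually writes out of bounds.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define OBJ_SIZE   8u   /* the object the code intends to write */
#define ARENA_SIZE 16u  /* the object plus the "adjacent memory" after it */

/*
 * Hypothetical code under suspicion: it trusts `len` without checking it
 * against OBJ_SIZE. The assert only keeps this simulation inside the arena.
 */
static void
fill_object(unsigned char *arena, size_t len)
{
    assert(len <= ARENA_SIZE);
    memset(arena, 0xAA, len);
}

/*
 * One experiment: returns true if writing `len` bytes changed any byte
 * beyond the end of the object.
 */
static bool
corrupts_neighbor(size_t len)
{
    unsigned char arena[ARENA_SIZE];

    memset(arena, 0, sizeof(arena));
    fill_object(arena, len);
    for (size_t i = OBJ_SIZE; i < ARENA_SIZE; i++) {
        if (arena[i] != 0)
            return true;
    }
    return false;
}
```

The hypothesis predicts that `corrupts_neighbor(OBJ_SIZE)` is false and `corrupts_neighbor(OBJ_SIZE + 1)` is true. If either prediction fails, the hypothesis is wrong, and that is still new knowledge.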

That’s all well and good. But sometimes we don’t actually have enough information to ask intelligent questions about the data that we see to help us form a hypothesis. So what do we do then? We might not even know the causes for memory corruption.

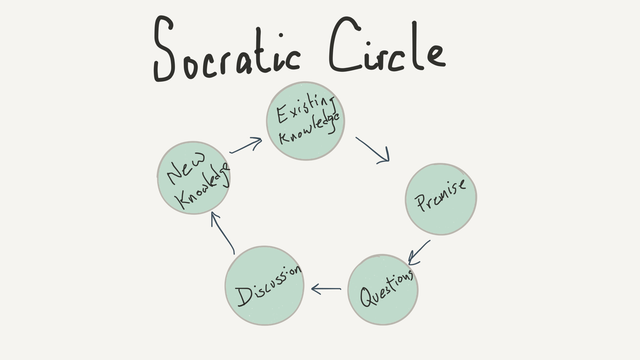

The Socratic method is a fantastic way to build understanding in a collaborative fashion. It works hand-in-hand with the scientific method because its goal is to identify and eliminate hypotheses that lead to contradictions. And it’s based on the idea that people build new knowledge from existing knowledge, so it explicitly promotes curiosity.

The circular flowchart I’ve drawn here is an illustration of how a group process called “the Socratic circle” is designed to work. Starting from some existing knowledge, a premise is introduced by the person who is aiming to learn. A mentor then asks questions about this premise, and a discussion ensues. The mentor is guiding the discussion through questions, not through providing answers. The mentee is the one providing answers and making statements about the questions.

This can lead to new questions, at which point we repeat the process, but it’s repeated until some new knowledge is formed… at which point that becomes existing knowledge, and we can repeat with new premises.

A really key benefit here is it allows a mentee to discover knowledge, to really own it. Isn’t it really great to feel like you’ve discovered something new?

It’s so much better than this typical, learn-by-rote method of teaching that we all sort of think of as teaching, where all glory belongs to the teacher, to the mentor. Owning new knowledge feels really great for the person who is learning, and it absolutely helps to build and solidify strong teams.

The next two techniques I’d like to share with you, I learned from Allison Kaptur. Active recall – I think she calls it something different, I don’t remember what she calls it; I call it active recall – it’s the process of actively trying to remember the answer to a thing that you don’t think you know. Flash cards. We all think they suck, but maybe we’re just not using them properly.

For example, as applied to something real, remember at the very beginning of this talk, I was ranting about how mmap is confusing and I’m always looking at the manpage? Well, instead of looking at the manpage, I can use active recall. And what this means is that I try my hardest to write the line of code to consume the interface and its errors. (Or lines, if I’m consuming the errors as well.) I just do that to the best of my ability. As I think it works.

And then regardless of whether I’m right or wrong, I can check my answer, because I have a manpage that tells me. So I can actively work on trying to remember what the answer to a thing is before I do the checking. It’s completely harmless. I wouldn’t necessarily recommend doing this in production, but it’s harmless like 99% of the time or more.

In addition to being a really excellent personal exercise, we can also practice this with others on our teams. It fits in really nicely with the Socratic method. So for example, the next time somebody comes up with a bug and they say, “Hey, how did this happen,” you can say, “Well, what’s your understanding of the bug right now?” This engages in a Socratic dialogue and forces them to recall their knowledge of the system and the conditions of this bug and what they think.

Another technique I learned from Allison is spaced practice. The idea here is that when we learn new information, our brains need time to process it, or sort it, or whatever makes sense for you to visualize how we retain information. If we spend too much time adding new, related information (like in a 40 minute talk), our brains don’t have time to do the background processing they need to really let that information sink in. I’m not a neuroscientist, so apologies, that hand-wavy thing is about as good as I’m going to get here.

But spaced practice is the idea of switching between different tasks to allow this processing to occur. Different unrelated tasks.

So for example, think of the last time you spent an entire afternoon staring at a debugger. It’s really easy for us to get engaged in these sort of sunk-cost fallacies about our work, where we spend additional time – more and more and more time – debugging despite diminishing returns for our effort.

If we put the problem away for a few hours or overnight, frequently we have a workable solution the next time we come back to it; I think we all identify with this.

What we can do about this is to have one or two additional items to switch to through the course of the day. Do go eat lunch. Do take breaks. Go home. Go to sleep. Give yourself the personal resources that you need to finish the challenge, and really encourage your team members to do the same thing.

Especially if you’re in a managerial role: it’s extremely important because most people won’t ask for something from you. It’s extremely important for you to explicitly allow people to just let something go for a little while. Especially when they’ve worked really hard for a really long time and they have basically nothing to show for it.

What spaced practice doesn’t mean is putting problems to the side and giving up when you encounter a challenging problem. Persevere through difficulties. Challenges are opportunities to learn something new, to gain skill. If you need help, ask for it and be available to others who need your help. Help them persevere by giving them effort-based encouragement. By engaging in Socratic exercises to solve their issue. And when appropriate, by giving them space to let something go for a while, and something else to work on.

And maybe even something easier. That’s not really promoting a fixed mindset, because what it does is it gives them a win. And after a really big failure, even a small win makes a huge difference psychologically to people.

So I think we’ve seen that debugging is fundamentally more difficult than programming. There are things we can do to make it less awful. And I think if we get down to it, the change needs to start in our culture.

Engineering culture has this sort of romance with the idea of “smart.” It starts with our language, we praise people for their “genius” or their “intelligence” instead of their effort, or the outcome. But our jobs are all fundamentally effort-driven.

We engage in fixed-mindset assessments of our peers. We engage in activities like hero worship: “Linus Torvalds is a god” (regardless of how you feel about him). Despite that we do this, our heroes are actually pretty similar to us. And if they have a skill that we don’t, we can get to be like them with just a little bit of effort and education.

Our culture sometimes doesn’t promote the tools we need to stay in our Flow state. Or to develop a growth mindset. We convince ourselves and others that deadlines mean that we need to discard processes like the scientific and Socratic methods, despite the fact that the time you spend using them actually saves you time in the long run.

We interrupt people with pop-up notifications in Slack, with trivial things that could have been solved better in email. Our culture doesn’t do a lot to promote the soft skills that are required to really be effective at debugging, and as I would also argue, anything to do with actually programming.

So let’s change this.

Regardless of whether you’re a manager, a mentor, a lead, an architect, an individual contributor, recognize and praise people for their effort. Congratulate hard work, not genius. Recognize and promote the idea that failure is not negative, it’s part of the learning process.

Be curious, be scientific in your work. Encourage learning and skill development, whether that’s through the Socratic method or curiosity. Through active recall. Or through sending folks, sending your employees, to conferences like this one where they can learn something new.

Give yourself time to digest information by taking breaks. Don’t interrupt people, don’t interrupt yourself with trivialities. Teach folks from what they know, not from what you know.

In closing, I want to re-assess Kernighan’s conjecture and his question.

Everyone knows that debugging is twice as hard as writing a program in the first place. So if you’re as clever as you can be when you write it, how will you ever debug it?

We’ve learned today that there’s no absolute, objective difficulty to debugging. We also now know that Kernighan is correct: debugging the most difficult problems – the hardest problems – is always going to be harder than writing our cleverest code. But by adopting these processes, by adopting and promoting a growth mindset – the debugging mindset – we can now definitively answer his question of how we will do it.

Thank you.

Here are my references for those of you who care. I have 12 minutes for questions if anyone has any.

Hey. Great talk, you mentioned this earlier in your talk and I was curious to know why you think minorities, in particular females, would fit more into a fixed mindset, and what is encouraging that behavior.

Yep! So this actually comes from another – the first paper I read trying to tie Dweck’s stuff to computer science. And at least in the United States this is true, but I think our culture is similar enough to other Western European cultures – and just sort of patriarchal, right?

And so what’s actually true is that high IQ girls in elementary school – so up through grade five – outperform every other group. But because of sort of socially and culturally imposed ideas like “women should be pretty, not smart” or “best to be seen and not heard,” these things are reinforced. And these things actively promote a fixed mindset.

And so, because of these cultural pressures, women are more likely to develop a fixed mindset. What you see is these high performing girls in fifth grade, all of a sudden they get to middle school and their grades go down a cliff.

The idea behind what they were saying in this paper was that this even goes as far as to explain why in computer science (and maybe STEM in general) you even see the discrepancy in gender balance in the enrollment level. Women don’t enroll in C.S. as much as men. And they tie that back to this because everything about computer science, and especially debugging, requires a growth oriented view.

So if you don’t have that, and you think you can’t solve these problems, and you’re engaging in a field that’s constantly asking you to solve something hard and outside of your skill level, you just don’t. You switch to something else.

So that’s where that comes from. I don’t think there’s anything inherently true that says it has to be that way. And this is where I’m coming from with starting the change in our culture.

So when you were talking about the Socratic method, how do you engage in the Socratic method without sliding into a patronizing tone, if you know what I mean?

Yeah, that’s really hard. So, to be honest, most of my experience with this is on IRC and so people are just going to take things how they take them. So in like help channels like ##c on Freenode, or #freebsd, or other channels that I’m involved in or have been.

I think it gets back to what some other folks in this track have said which is that it requires trust. If you have a good relationship with the person you’re involved with in this method – or the people you’re involved with in this method, you end up having this trust. Trust that your questions are genuinely curiosity-driven.

Also one thing I didn’t really touch on is that it can feel patronizing, but it isn’t necessarily experienced that way. And one of the reasons we might be concerned about that is that these aren’t really behaviors we’re engaging in frequently with our colleagues or even with ourselves. But, getting again back to neuroscience, there’s neuroplasticity. The more frequently you engage in some behavior, the less weird it becomes.

And so I would suggest if you’re engaging in this exercise and you’re concerned about that, be up-front about what you’re doing, at the head. Say, “I’d like to try this activity with you… it might come across…” – and we can all sort of tell if we’re sounding like an ass, so just don’t sound like an ass, but, “I’m not trying to be condescending and I’m not trying to be patronizing, but I’d like to try this method with you, would you engage in it with me?”

And from there, I think you’ve kind of gotten rid of that sort of stigma, maybe.

Hi, so you talked about a couple of methods like taking breaks, working on a couple of different things to kind of give people that space to grow. What I’m curious about is in a very time-critical scenario – servers are going down, you’re hemorrhaging value – what other approaches would you take, or how can we apply that in a way that sort of tightens that loop and keeps people focused.

That’s a really good question, and I would have suggested you go to my colleague Lisa Phillips’ talk this morning on how we run our incident command. And actually, fundamentally, it follows a lot of these same principles. So she’s actually VP of our ops department and she’d be able to explain – I’m just a lowly engineer slash tech lead – whatever I am.

Provide support for people outside the context of what they’re doing. So we have incident commanders who delegate tasks, specific tasks, who consume information from folks, who ask people if they need bathroom breaks or rest breaks or food breaks – who will order food for them. It really gets back again to some of the bits of spaced practice where you need to really give yourself the personal space to honor your body and your mind. I mean, without sounding too granola, I hope.

But these are things that you physiologically need, and when you don’t get them, your performance diminishes. So it is always better to meet those needs; in fact, I would argue you should be doing all of these things especially in those activities, because if you don’t have your needs met, you’re more likely to F-up.

I have seen, and you can see, some developers are really good at attacking a problem in some way, and others may take a while. Is that aligned with because they are fixed mindset versus growth, or is it something just because of experience and an individual’s expertise?

The mindset research doesn’t say anything about actual skill at any point in time. It just talks about motivations and approaches. So I don’t think you could derive mindset from that. But there are questions you could ask about a person and about their view.

So fixed-mindset people will say things like, “I just…” and, “I can’t” and stuff like that, and so you can observe that through the way they communicate with you. I don’t think you can observe it through how they do their work, or how long it takes them.

You might be able to observe it in how they approach problems. Do they try the same thing frequently expecting a different result? That maybe gives a better idea.