DHO is Mostly Confused

Rants from Devon H. O'Dell

Debugging the Internet

Yesterday, we were doing some tests to try to ensure that some changes we had made fixed some problems we were seeing with particular request types. What we noticed was that occasionally, a request would sit around forever and could not be destroyed, despite the associated connection for that session being in CLOSE-WAIT. We had noticed this behavior before, but were entirely unclear what to chalk it up to, and it didn’t seem like that big of a problem.

Unfortunately for us, this time, such behavior could lead to some less than desirable results.

So we dug into the problem, and boy was it hairy. If we sent a request to a cache node from our office and canceled the request before it was completed, the request would sit around seemingly forever. I could not reproduce this behavior from my VPS, but we could reliably get a connection to stick from the office. (It should be noted that we use a Cisco ASA router thing at the office.)

We were trying to figure out what was going on, and since we were blessed with being able to reproduce the problem from the office, we set out doing just that. We grabbed a packet capture on the cache node to see what it thought was going on:

dho@cache-xxxxxxx$ sudo tcpdump -i any -s 0 -w office.pcap host i.p.x.y

We did the same with the request from my VPS. What we noticed was really odd:

while my VPS sent a FIN and then many RST packets, the office network sent

a FIN and a single RST.

But it did send a RST. And since we saw that on the cache node, how in the

world was the socket hanging around in CLOSE-WAIT?

Security.

If strictly adhering to TCP, the sequence number of a RST only has to be

within the window. However, this behavior lead to all sorts of denial of

service attacks where people were able to close random connections by spraying

relatively few forged RST packets. In response to such attacks, RFC5961

suggests to require the sequence number of a RST packet to exactly match the

next expected sequence number. Most modern OSes do this, including Linux;

disabling this behavior and opening up again to RST spray attacks isn’t

really an option either.

And the Cisco ASA sends the RST packet with a sequence that’s off-by-one,

and then just doesn’t bother managing the connection anymore. Jerks. In the

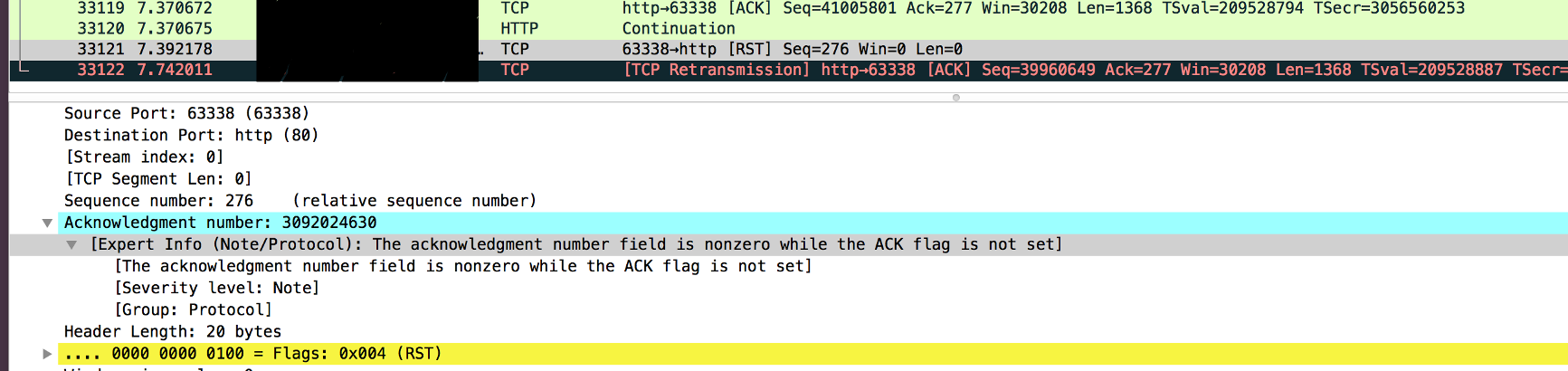

Wireshark capture below, we can see that the cache has acked a sequence 277

(which was the FIN packet, by the way), and the RST comes with sequence

number 276. We then begin retransmitting our ACK of the FIN.

What can we do about this?

Well, it turns out we had already done something about it. Though we use

blocking sockets, we do set SO_SNDTIMEO and SO_RCVTIMEO on them. The value

used was 600 seconds (or 10 minutes). This is the default value in the version

of Varnish we use, and we’ve never changed it.

Why would we want the value so high?

When caching large objects, Varnish attempts to make as few syscalls as

possible by filling in a struct iovec and using writev(2)

to send the data. This interface results in fewer syscalls, which generally

means lower overhead. In conjunction with SO_SNDTIMEO, however, it is

possible for writev(2) to return before having sent all its data. With a

sufficiently low value of SO_SNDTIMEO, we could increase the number of

system calls we do significantly.

Because we use blocking sockets with SO_SNDTIMEO, we have to check for short

writes. Another reason to set this value higher is because it returns in error

when no data from the buffer made it into the kernel’s send buffer during that

period. Varnish will restart sending when it does a short write, but will

abort if it writes nothing.

This means we have to be careful with how low we set the value, too. It turns out that connections that sat around forever did so because 10 minutes is a ton of time to make progress on a socket – and mobile networks are actually pretty aggressive about keeping connections open due to the flaky nature of wireless clients.

This all seems pretty straightforward in retrospect, but it took us a while to get to there. Part of this was probably because of assumptions: we assume that 600 seconds is a deliberately chosen value, we assumed that there was a bug in the code. Working with multiple people to debug anything is a fantastic idea. Eventually somebody stops assuming the same thing that everyone else is.

If there’s anything to learn from this it’s that collaboration is imperative when debugging. And that the Internet is hard.

Addendum 2015-07-13

Of course, it’s completely valid to continue writing to sockets in CLOSE-WAIT

state. At the socket level, we may call shutdown(fd, SHUT_WR); to signal that

we are done writing requests over our connection. We may still need to continue

receiving responses. Such sockets are considered “half-closed”, and this is an

entirely valid situation.

However, this behavior does present complexities with handling that situation.

At the end of the day, it comes down to how long you wait to time out while

writing to a socket. Indeed, write(2) and writev(2) block as long as the

kernel buffer is filled, so they aren’t actually making any guarantees about

progress. I imagine it is possible that a subsequent write(2) could fail

although connection progress was being made. I suspect this would be due to the

window size shrinking as bytes trickle out, with the kernel shrinking its

buffer at the same time.

Handling this situation is unlikely to be fruitful and results in the need for many system calls.

We’d benefit from a saner API.